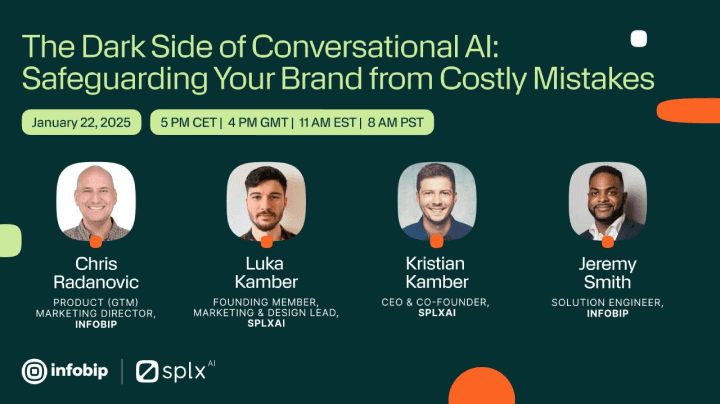

Webinar

The Dark Side of Conversational AI: Safeguarding Your Brand from Costly Mistakes

Join SplxAI & Infobip to learn how to protect your brand from Conversational AI risks, adopt AI security best practices, and build customer trust.

Chris Radanovic

Jeremy Smith

DATE

Jan 22, 2025

TIME & LENGTH

1 hour

STATUS

Available on demand

LANGUAGE

English

This exclusive webinar with Infobip and SplxAI explores why AI security is critical for Conversational AI applications built on top of LLMs (Large Language Models). Main topics covered in this session include examples of how jailbreaks, prompt injections, and hallucinations can cause significant damage to an organization's brand reputation, the legal penalties organizations face under increasing AI regulation if sensitive data is leaked, and more.

That's why implementing continuous risk assessment procedures is crucial to keeping GenAI systems secure.

AI red teaming enables continuous testing of dynamically evolving LLM-based applications, helping identify security gaps that traditional, infrequent penetration tests often miss.

Automated risk assessments reveal whether your AI firewalls and guardrails are configured properly to block hostile actors' attempts to exploit your assistant's weaknesses.

Thorough, continuous evaluation of Conversational AI detects vulnerabilities early, which mitigates the risk of hallucinations and misinformation, helps maintain public trust, and protects sensitive data.