Webinar

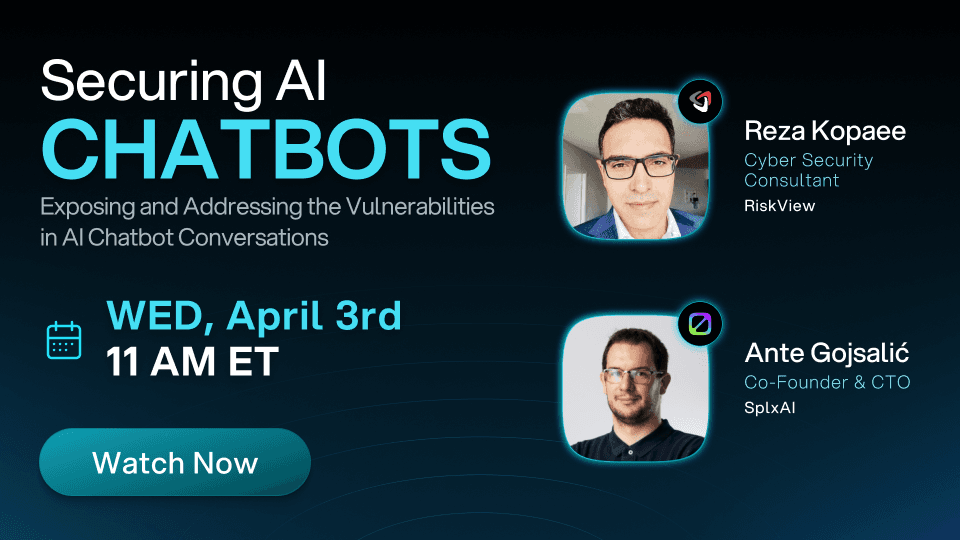

Securing AI Chatbots: Vulnerabilities in AI Chatbot Conversations

Discover key vulnerabilities in AI chatbots with Ante Gojsalić and Reza Kopaee. Learn to secure against adversarial attacks and protect your GenAI systems.

Ante Gojsalić

Reza Kopaee

DATE

Apr 3, 2024

TIME & LENGTH

36 min

STATUS

Available on demand

LANGUAGE

English

This webinar features Ante Gojsalić, Co-Founder and CTO at SplxAI, together with Reza Kopaee, Cyber Security Consultant at RiskView, and discusses critical security issues surrounding conversational AI. With the rapid growth of Generative AI (GenAI) applications and Large Language Model (LLM) technology, this session highlights the risks and challenges of deploying these systems in production environments, emphasizing the need for proactive measures to secure AI chatbot interactions against sophisticated threats. As more organizations adopt AI-driven conversational tools, the focus on robust AI chatbot security becomes essential to prevent adversarial attacks, data leakage, and system compromise.

How can enterprises adopt GenAI securely?

Over 90% of CISOs we spoke to reported that GenAI applications, including AI chatbots, are not yet production-ready, reflecting significant security concerns.

Guardrails are crucial but can cause revenue loss if misconfigured, as overly strict settings may block legitimate queries and frustrate users.

AI red teaming is essential in GenAI development to uncover vulnerabilities early, enhancing security before deployment.