Podcast

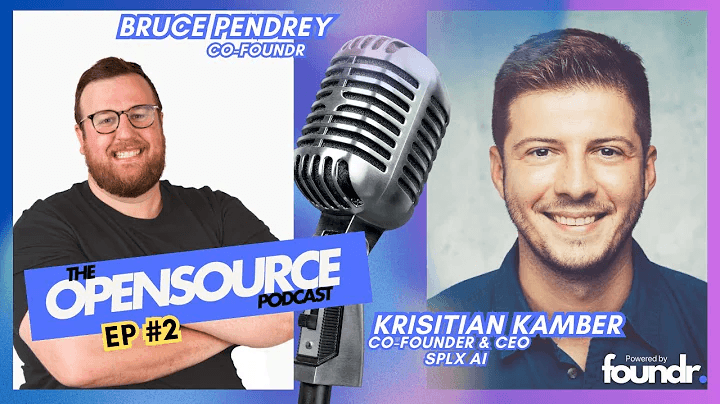

The Opensource Podcast: Automated and Continuous Red Teaming of AI Agents

Learn how SplxAI tackles AI security challenges through automated red teaming and addresses data privacy and compliance issues for generative AI solutions.

Bruce Pendrey

Kristian Kamber

DATE

Oct 7, 2024

TIME & LENGTH

30 min

STATUS

Available on demand

LANGUAGE

English

This Opensource Podcast episode features Bruce Pendrey, Co-Founder at foundr, and Kristian Kamber, Co-Founder and CEO at SplxAI. It explores key AI security challenges and solutions, focusing on vulnerabilities in chatbots, data privacy risks, and the automation of AI red teaming. The discussion highlights how SplxAI's approach bridges the gap between AI development and security through compliance-driven frameworks, advanced testing tools, and global adoption strategies. Key topics include mitigating threats in generative AI, addressing regulatory requirements, and using automation to make securing AI systems more efficient and scalable.

How Automation Is Redefining AI Security

AI Vulnerabilities: Generative AI systems face critical risks like chatbot data leaks, prompt injections, and expanding attack surfaces.

Automated Red Teaming: SplxAI automates up to 95% of penetration testing for AI systems, saving time and improving accuracy.

Regulatory Compliance: Ensuring AI adoption aligns with data privacy laws and security standards is a key driver for securing production systems.