Podcast

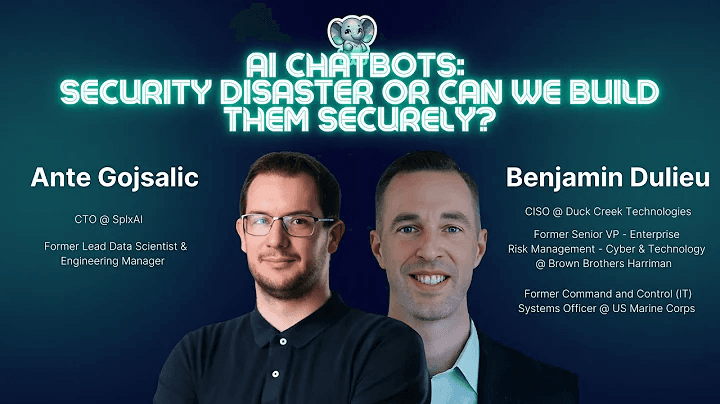

AI Chatbots: Security Disaster or Can We Build them Securely?

Explore AI chatbot security, monitoring, red teaming, and data leakage risks in this expert-led podcast with tips on building and deploying secure AI systems.

Ante Gojsalić

Benjamin Dulieu

DATE

Oct 15, 2024

TIME & LENGTH

50 min

STATUS

Available on demand

LANGUAGE

English

This podcast episode of The Elephant in AppSec featuring Ante Gojsalic, Co-Founder and CTO at SplxAI, Benajim Dulieu, CIO & CISO at Duck Creek Technologies, and Alexandra Charikova, Growth Marketing Manager at Escape, delves into the challenges and advancements in AI chatbot security, exploring risks like data leakage, multilingual vulnerabilities, and the differences between open source and proprietary security solutions. It highlights the importance of embedding security early in AI development, leveraging tools to continuously assess and monitor GenAI chatbots for threats. Real-world case studies and actionable insights emphasize how organizations can proactively mitigate risks, secure sensitive data, and foster a strong security culture to unlock the full potential of AI systems.

Securing AI Chatbots: Managing Risks and Unlocking Potential

Proactive Risk Mitigation: Integrating security and privacy measures early is crucial to prevent vulnerabilities like data leakage and social engineering attacks.

Continuous Monitoring: Regular vulnerability scans and red teaming exercises ensure systems adapt to evolving threats and maintain a robust security posture.

Cultural Shift in Security: Organizations must embed security into the development lifecycle, fostering collaboration between technical and business teams for resilient AI systems.